My 72 Screaming Frog Exclude Rules for Trackers and Pixels

Screaming Frog is one of the most powerful tools in a technical SEO’s toolkit (or any SEO’s toolkit, for that matter). By crawling a site the way a search engine would, we can pinpoint issues with content, site structure, metadata, linking, and countless other ranking factors that even the site owners and developers might be completely oblivious to.

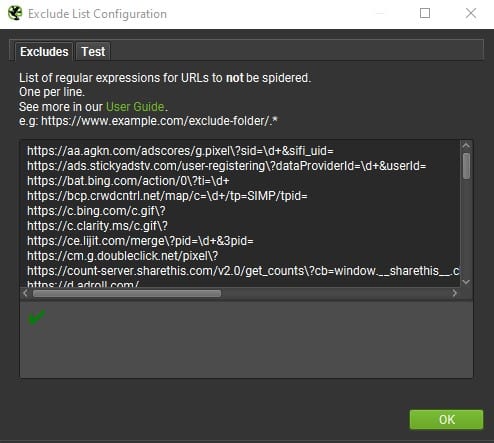

The potential uses for Screaming Frog are limited only by your creativity, but today I want to focus on one feature of the tool: the Exclude setting. Specifically, I want to share my list of exclude rules that I use to remove advertising trackers and pixels.

Why exclude trackers and pixels from your crawl?

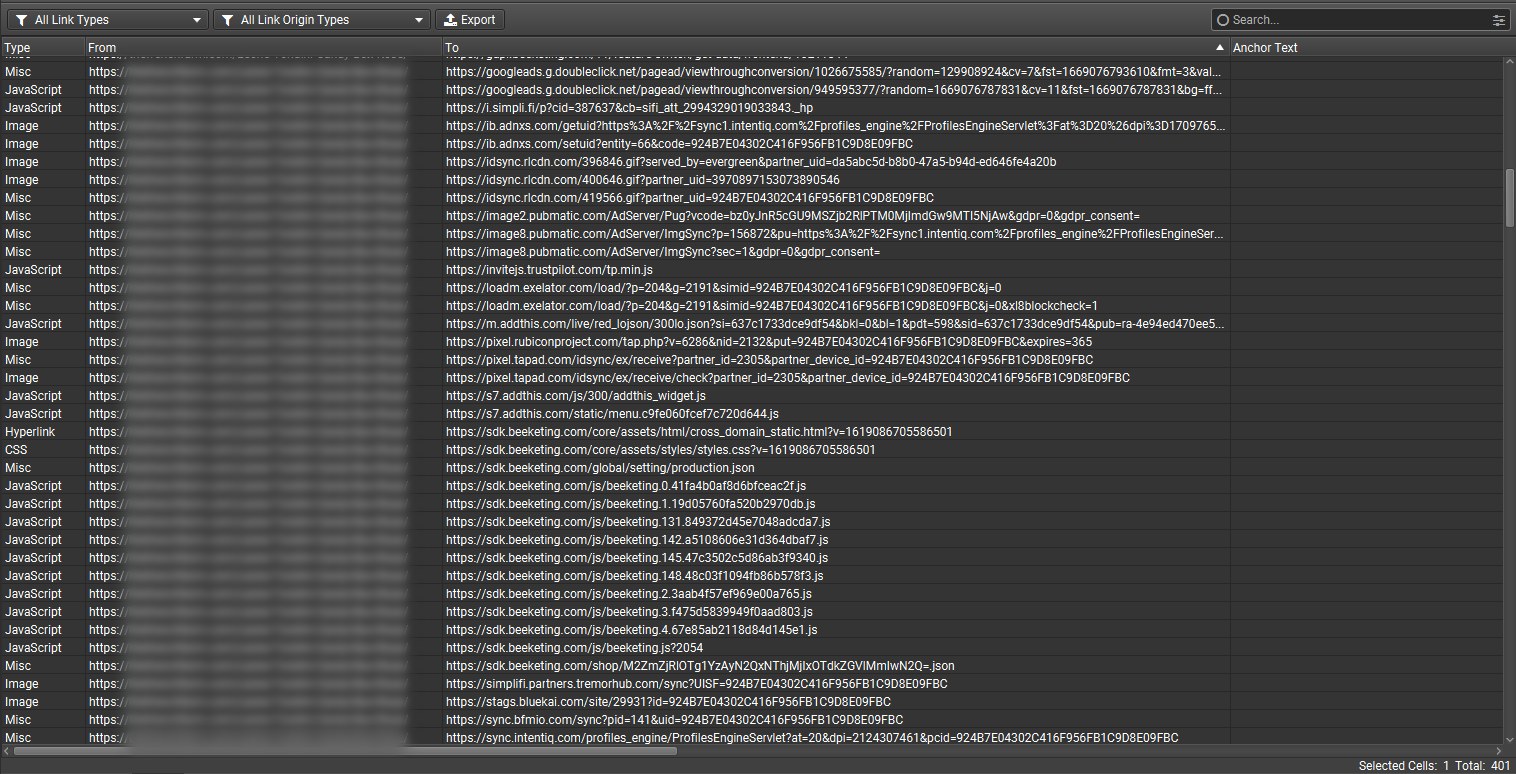

Most websites utilize some form of analytics to track user behavior. If the site’s owners use paid search, paid social, or display advertising, they also probably have conversion tracking set up on the site, which can link a user’s conversion action (making a purchase, submitting a contact form, downloading an app, etc.) to the ad they viewed to get there. These trackers can take the form of JavaScript, images, and other file types, and typically generate a unique URL for each page of the site. On top of that, a lot of trackers will run through a series of redirects, sending “pings” to multiple sites to record the conversion or pageview.

These additional URLs are picked up by crawlers like Screaming Frog and can inflate the total number of URLs to be crawled by 20 times or more. If your site is especially tracker-heavy, which is often the case with ecommerce sites, each HTML page could contain as many as 40-80 unique outlinks to trackers from a variety of domains (and some might even have more). If a site is big enough (10,000+ URLs), you may find that your crawl takes days to complete and results in a crawl file that is several gigabytes in size.
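To put rough numbers on that, here is a back-of-the-envelope sketch using the figures above (a 10,000-page site with 40 to 80 tracker outlinks per page). It assumes every tracker URL is unique and ignores redirect chains, so treat it as an illustration rather than a prediction for any particular site.

```python
# Rough estimate of how tracker outlinks inflate a crawl.
# The page count and per-page tracker range come from the paragraph above;
# real sites will vary, and redirect chains would add even more URLs.
html_pages = 10_000
trackers_per_page_low, trackers_per_page_high = 40, 80


def estimate_crawl_size(pages: int, trackers_per_page: int) -> int:
    """Total URLs if every tracker outlink on every page is unique."""
    return pages + pages * trackers_per_page


low = estimate_crawl_size(html_pages, trackers_per_page_low)
high = estimate_crawl_size(html_pages, trackers_per_page_high)

print(f"{low:,} to {high:,} URLs")                       # 410,000 to 810,000 URLs
print(f"{low // html_pages}x to {high // html_pages}x")  # 41x to 81x
```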

By using the Exclude feature and setting rules to filter out trackers and other extraneous pages, I was able to reduce a crawl with almost 800,000 URLs to 14,000 URLs.

Why not just exclude external links or turn off JavaScript rendering?

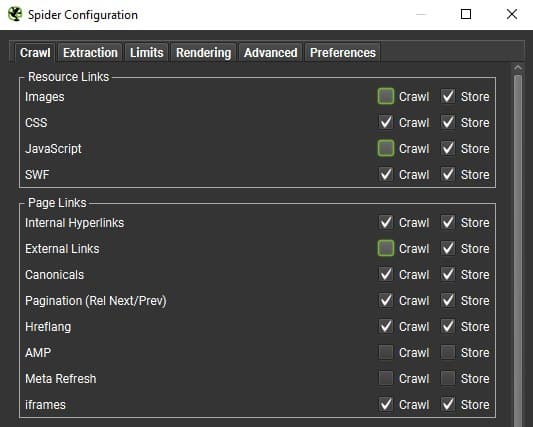

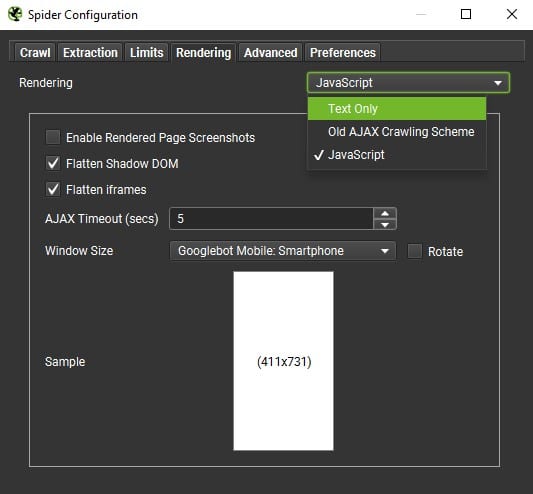

Now there are a few easy ways to avoid crawling these trackers: you could turn off crawling for external links entirely, turn off crawling for JavaScript files or image files to try and avoid pixels, or disable JavaScript rendering entirely.

However, these tactics usually cast too wide a net. Some sites require JavaScript rendering to load content, metadata, and even internal links. While this is not ideal for SEO, turning off rendering blinds you to any changes made when JavaScript is enabled, which you want to know about when you crawl a site. Also, the “Images” checkbox in the Spider settings only affects URLs discovered via an <img> tag, such as <img src="image.jpg">. Pixels are not found via this tag, so unchecking the box won’t affect them.

As for excluding all external links, this could be a viable option in a pinch, but your crawl results won’t show any external links that lead to 4xx or 5xx errors, and changing this setting can affect page rendering if images, CSS files, and other resources are hosted on another domain.

How I developed this list

When I first run a site crawl in Screaming Frog, I’m usually trying to cast as wide a net as possible to find any pages I can. In this first look, I want to make sure I know everything I can about the site, including all the weird quirks of the CMS and page templates, strange orphan URLs, external links, resource links, or JavaScript files. For that reason, I typically follow the philosophy and recommendations espoused by Max Peters’s Screaming Frog settings guide (one of my favorite Screaming Frog resources) and turn on all of the URL discovery settings I can without crashing my computer. This can include:

- Crawling external links

- Crawling AMP pages

- Crawling images

- Crawling iframes

- Crawling pages discovered via Rel Next/Prev

- Following internal and external “nofollow” links

- Crawling and rendering JavaScript

- Ignoring robots.txt

However, this usually leaves me with a massive crawl file that takes up valuable hard drive space AND is difficult to analyze; with so many extraneous URLs floating around, it can be difficult to pinpoint the SEO issues I want to address.

I looked through several crawls like this and identified the tracking URLs out of the external links. Each link typically contains a session ID of some sort, as well as an account ID that will be consistent across the site for that tracking domain (for this reason, I’ve used regular expressions to ensure that each rule works regardless of the domain). All of the URLs in this list have appeared at least once in a crawl, and I have verified each by either visiting the URL and confirming that it is a pixel or by researching the domain and subdomains.
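I did this review by hand, but if you export the external URLs from a crawl, a short script can help shortlist candidates by showing which hostnames generate the most unique URLs. This is only a sketch: the `sample_urls` list and the `hosts_by_url_count` helper are made up for illustration, and a high count is a hint to investigate, not proof that a host is a tracker.

```python
from collections import Counter
from urllib.parse import urlsplit


def hosts_by_url_count(urls):
    """Count crawled URLs per hostname, most frequent first."""
    counts = Counter(urlsplit(u).hostname for u in urls)
    return counts.most_common()


# Hypothetical sample; in practice this would be the exported external URLs.
sample_urls = [
    "https://bat.bing.com/action/0?ti=111&Ver=2",
    "https://bat.bing.com/action/0?ti=111&Ver=2&mid=abc",
    "https://www.facebook.com/tr/?id=222&ev=PageView",
    "https://example.org/partner-page",
]

for host, count in hosts_by_url_count(sample_urls):
    print(host, count)
```

Hosts that appear once per crawled page, each time with a slightly different query string, are the ones worth checking against the kinds of tracking URLs described above.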

That said, I generally leave these exclude rules off the first time I crawl a site. I want the first go-round to show me everything crawlers could see when they peruse the site, warts and all. However, creating a unique list for each site is more time-consuming than you might think, and I’ve found this “master list” to be incredibly useful for subsequent crawls and for when I need to run a crawl quickly on a new site. I also keep it updated over time, since I’m always finding new tracking URLs I haven’t seen before.

How to use this list

Ideally, the first time you crawl a site, don’t exclude any URLs. This way, you’ll at least have an idea of what URLs are being removed with the exclusions. Also, run a crawl without these exclusions at least once a year to make sure nothing new has popped up.

To run the crawl with the exclude rules:

- In Spider mode, go to Configuration > Exclude.

- Copy and paste the exclusion list into the box that pops up.

- Set any other necessary configuration settings.

- Run the crawl!

You can pause the crawl and update this list at any time. In theory, Screaming Frog should then remove any queued URLs that match the new rules, but I haven’t been able to confirm that it actually does.
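If you want to see what a set of rules would remove before committing to a long crawl, you can dry-run them against a handful of URLs from a previous crawl. The sketch below makes two assumptions: that exclude rules are applied one per line as partial matches (which is how the rules in this list are written, since none of them are wrapped in `.*`), and that Python’s `re` module behaves like Screaming Frog’s regex engine for patterns this simple. The sample URLs and the helper names are made up for illustration.

```python
import re


def compile_rules(rule_text):
    """Compile one regex per non-empty line, as pasted into the Exclude box."""
    return [re.compile(line.strip()) for line in rule_text.splitlines() if line.strip()]


def is_excluded(url, rules):
    """True if any rule matches anywhere in the URL (partial match, assumed)."""
    return any(rule.search(url) for rule in rules)


# Three rules copied from the list in this post.
rule_text = r"""
https://bat.bing.com/action/0\?ti=\d+
https://www.facebook.com/tr/\?id=\d+&ev=
https://px4?.ads.linkedin.com/
"""
rules = compile_rules(rule_text)

# Hypothetical URLs of the kind you might see in a crawl export.
sample_urls = [
    "https://bat.bing.com/action/0?ti=12345&Ver=2",
    "https://www.facebook.com/tr/?id=987654321&ev=PageView",
    "https://px.ads.linkedin.com/collect?pid=1",
    "https://www.example.com/products/",
]

for url in sample_urls:
    print("EXCLUDE" if is_excluded(url, rules) else "keep   ", url)
```

With these inputs, the first three URLs are flagged for exclusion and the product page is kept.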

Claire’s Exclude List for Trackers and Pixels

There are currently 72 rules in my exclude list, and I’m always adding new ones. The last few rules are not strictly for trackers; they eliminate other extraneous URLs from the crawl, such as CAPTCHA widgets and social share links.

See something I missed? Let me know!

Without further ado, here is the full list (Regex special characters are in bold and green*):

- https://aa.agkn.com/adscores/g.pixel\?sid=\d+&sifi_uid=

- https://ads.stickyadstv.com/user-registering\?dataProviderId=\d+&userId=

- https://app.signpanda.me/scripttag/product\?shop=[\w.]+&product_uuid=\d+&force_check=true

- https://bat.bing.com/action/0\?ti=\d+

- https://bcp.crwdcntrl.net/map/c=\d+/tp=SIMP/tpid=

- https://c.bing.com/c.gif\?

- https://c.clarity.ms/c.gif\?

- https://ce.lijit.com/merge\?pid=\d+&3pid=

- https://cm.g.doubleclick.net/pixel\?

- https://connect.facebook.net/signals/config/

- https://connect.facebook.net/signals/plugins/identity.js\?v=

- https://count-server.sharethis.com/v2.0/get_counts\?cb=window.__sharethis__.cb

- https://d.adroll.com/

- https://d.agkn.com/pixel/\d+/\?che=

- https://dsum-sec.casalemedia.com/rum\?cm_dsp_id=\d+&external_user_id=

- https://eb2.3lift.com/xuid

- https://fei.pro-market.net/engine\?du=\d+;csync=

- https://googleads.g.doubleclick.net/pagead/viewthroughconversion/

- https://gpush.cogocast.net/

- https://ib.adnxs.com/getuid\?https%3A%2F%2Fsync1?.intentiq.com

- https://ib.adnxs.com/setuid\?

- https://idsync.rlcdn.com/

- https://image2.pubmatic.com/AdServer/Pug\?vcode=

- https://image8.pubmatic.com/AdServer/ImgSync\?p=\d+

- https://js.calltrk.com/companies/\d+/

- https://js.calltrk.com/group/0/\w+/12/

- https://l.sharethis.com/

- https://loadm.exelator.com/load/\?p=\d+&g=\d+&simid=

- https://m.addthis.com/live/red_lojson/300lo.json\?si=

- https://nova.collect.igodigital.com/c2/\d+/track_page_view\?payload=

- https://p.adsymptotic.com/

- https://pbid.pro-market.net/engine\?du=\d+&mimetype=img&google_gid=

- https://pi.pardot.com/analytics

- https://pippio.com/api/sync

- https://pixel.advertising.com/ups/\d+/sync\?uid=

- https://pixel.rubiconproject.com/tap.php\?v=\d+&nid=\d+&put=

- https://pixel.tapad.com/idsync/ex/receive

- https://px4?.ads.linkedin.com/

- https://simplifi.partners.tremorhub.com/sync\?UISF=

- https://stags.bluekai.com/site/\d+\?id=

- https://stats.g.doubleclick.net/r/collect\?v=1&aip=1&t=dc&_r=3&tid=UA-[\d-]+&cid=

- https://sync.bfmio.com/sync\?pid=\d+&uid=

- https://sync1?.intentiq.com/profiles_engine/ProfilesEngineServlet\?at=20&dpi=

- https://sync.outbrain.com/cookie-sync\?p=adroll&uid=

- https://sync.search.spotxchange.com/partner\?adv_id=\d+&uid=

- https://sync.taboola.com/sg/adroll-network/1/rtb-h\?taboola_hm=

- https://syndication.twitter.com/settings\?session_id=

- https://t.sharethis.com/1/d/t.dhj\?cid=\w+&cls=B&dmn=

- https://tag.apxlv.com/tag/partner/222\?c_i=1&ld=1&pixel_mode=pixel&jid=

- https://tag.apxlv.com/tag/partner/222\?c_i=2

- https://tag.cogocast.net/tag/partner/222\?pixel_mode=pixel&dc_id=

- https://tags.w55c.net/match-result\?id=

- https://tags.w55c.net/rs\?sccid=

- https://track.beeketing.com/bk/api/actions.json\?distinct_id=

- https://track.hubspot.com/__ptq.gif\?

- https://um.simpli.fi/

- https://ups.analytics.yahoo.com/ups/\d+/sync\?uid=

- https://us-u.openx.net/w/1.0/sd\?

- https://www.facebook.com/tr/\?a=plbigcommerce1.2

- https://www.facebook.com/tr/\?id=\d+&ev=

- https://www.google.com/ads/ga-audiences\?v=1&aip=1&t=sr&_r=4&tid=UA-[\d-]+&cid=

- https://www.google.com/pagead/

- https://www.googleadservices.com/pagead/conversion/\d+/\?random=

- https://www.googletagmanager.com/

- https://x.bidswitch.net/sync\?dsp_id=\d+&user_id=

- https://x.bidswitch.net/ul_cb/sync\?dsp_id=\d+&user_id=

- https://salesiq.zoho.com/visitor/v2/channels/website\?widgetcode=

- https://twitter.com/intent/tweet\?text=

- https://twitter.com/share\?text=

- https://www.facebook.com/sharer.php\?u=

- https://www.facebook.com/sharer/sharer.php\?u=

- https://www.google.com/recaptcha/

Other exclude rules to consider:

- WordPress files: Check out this article from digital marketer and blogger TJ Kelly for a great, comprehensive list of the URLs to block from a WordPress crawl (which you probably also want to look into blocking in your robots.txt).

- “Add to calendar” links:

  - \?ical

  - https://www.google.com/calendar/event\?action=

- For events: automatically generated calendar pages that stretch forever forwards and backwards through time. This will vary based on your URL structure, but for a site using the WordPress plugin The Events Calendar, I added the following exclude rules:

  - /events/20\d\d-\d\d-\d\d/ (e.g., /events/2022-12-31/)

  - /events/category/.+/20\d\d-\d\d-\d\d/ (e.g., /events/category/family/2022-12-31/)

- CTA redirects, especially on HubSpot pages (this is an excellent example of pages you wouldn’t want to exclude in your first-ever crawl, because you want to know that this CTA tracking is in place and that all of the links on the page are being redirected at least once), e.g.:

  - https://cta-redirect.hubspot.com/cta/redirect/\d+/

  - https://cta-service-cms2.hubspot.com/ctas/v2/public/cs/cta-json\?canon=

  - /cs/c/\?cta_guid=

  - /hs/cta/ctas/v2/public/cs/cta-loaded.js\?pid=\d+8&pg=

- If you’re using the Google Analytics API to crawl new URLs discovered in Google Analytics, you may want to exclude internal search pages from your crawl. Depending on the number of unique searches performed on the site, this could significantly add to the URL queue. You can still view information on common internal search queries in the Google Analytics Site Search report. Example rules:

  - \?s=

  - \?search=

  - \?search_query=

When adding to the exclude list, it’s best to keep your rules as specific as possible to avoid excluding something that you don’t mean to. This could lead to the spider missing sections of the website or, if JavaScript rendering is enabled, it could cause issues with the page content rendering.
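Here is a small illustration of why specificity matters, again assuming rules are applied as partial matches and using Python’s `re` module as a stand-in for Screaming Frog’s regex engine. The example.com URLs are hypothetical, and the `s=` rule is a deliberately sloppy version of the `\?s=` search rule above.

```python
import re

# Hypothetical internal URLs; only the first is an internal search page.
urls = [
    "https://www.example.com/?s=blue+widgets",
    "https://www.example.com/shop?colors=red",
    "https://www.example.com/news?topics=seo",
]

specific = re.compile(r"\?s=")  # the literal "?s=" at the start of a query string
too_broad = re.compile(r"s=")   # any parameter whose name happens to end in "s"

specific_hits = [u for u in urls if specific.search(u)]
broad_hits = [u for u in urls if too_broad.search(u)]

print(len(specific_hits), "URL(s) excluded by the specific rule")  # 1
print(len(broad_hits), "URL(s) excluded by the broad rule")        # 3
```

The broad rule would quietly drop the shop and news pages from the crawl along with the search results, which is exactly the kind of gap that is hard to notice after the fact.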

*About regex rules:

Screaming Frog uses Regular Expressions in its include and exclude rules. If you aren’t familiar with Regex, I highly suggest you familiarize yourself with this tool, as it can be an incredible time-saver for any data processing and is especially useful for technical SEO. You can learn more about special Regex characters in my favorite Regex cheatsheet, and, as always, the best way to learn is by doing. Here are some of the Regex symbols I used in these rules with an explanation of each:

- ? (Question mark): This is a quantifier indicating that the previous character appears either zero or one times in the string.

  - ‘apples?’ matches “apple” or “apples”

  - ‘px4?.ads.linkedin.com’ matches “px4.ads.linkedin.com” or “px.ads.linkedin.com”

- + (Plus sign): This quantifier indicates that the previous character appears one or more times.

  - ‘A+’ matches “A” or “AAAAAAAAAAAAAAA”

- \ (Backslash): Indicates that the next character should be read literally. It is typically used with characters that are also Regex special characters. This is especially relevant for question marks (?), which are a Regex quantifier but also mark the beginning of a query string in a URL.

  - ‘example.com/?page=’ does NOT match “example.com/?page=1” but does match “example.com/page=1” and “example.compage=1”

  - ‘example.com/\?page=’ DOES match “example.com/?page=1”

- [ ] (Square brackets): Match any single character contained within the brackets.

  - ‘[xyz]’ matches “x” or “y” or “z”

  - ‘[\d-]+’ matches one or more characters that are a digit or a hyphen, e.g., “802972-9264” or “123456789-1”
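If you’d like to try these examples yourself, the snippet below checks each of them with Python’s `re` module. Python is my stand-in here; Screaming Frog’s own regex engine may differ in edge cases, but for basic symbols like these the behavior should be the same. The `matches` helper is just a convenience wrapper for this demo.

```python
import re


def matches(pattern, text):
    """True if the pattern is found anywhere in the text (partial match)."""
    return re.search(pattern, text) is not None


# ? makes the previous character optional
print(matches(r"apples?", "apple"), matches(r"apples?", "apples"))  # True True
print(matches(r"px4?.ads.linkedin.com", "px.ads.linkedin.com"))      # True

# + requires one or more of the previous character
print(matches(r"A+", "AAAAAAAAAAAAAAA"), matches(r"A+", "BBB"))      # True False

# An unescaped ? makes the slash optional instead of matching a literal "?"
print(matches(r"example.com/?page=", "example.com/?page=1"))         # False
print(matches(r"example.com/?page=", "example.compage=1"))           # True

# Escaping the ? matches the literal query-string marker
print(matches(r"example.com/\?page=", "example.com/?page=1"))        # True

# [\d-]+ is a run of digits and hyphens; fullmatch checks the whole string
print(re.fullmatch(r"[\d-]+", "802972-9264") is not None)            # True
```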

What Do You Think?

Did I miss something in the list? Did I include something that will unintentionally block pages we don’t want blocked? Let me know in the comments!
